A veteran technology reporter with 25 years’ experience deleted OpenAI’s Sora app after using it, declaring the product “a jackhammer that demolishes the barrier between the real and the unreal,” reports The New York Times.

Bobbie Johnson, a technology and science journalist, wrote that using Sora’s never-ending feed of AI-generated videos made him “want to run, screaming, into the ocean.” He said no new product has ever left him feeling so pessimistic.

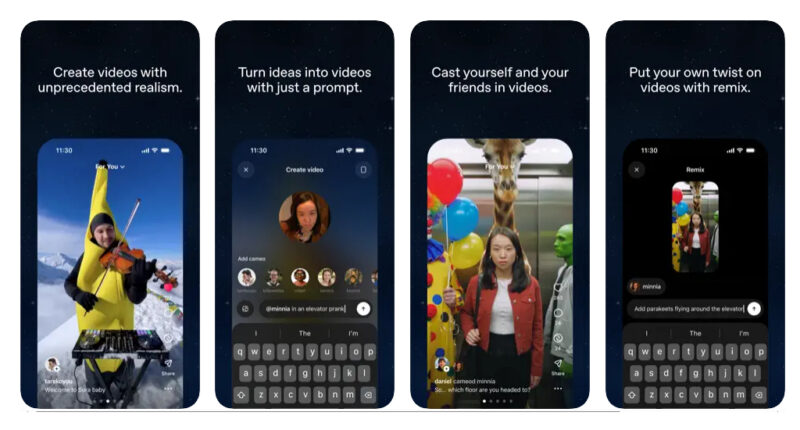

The app resembles TikTok or Instagram’s Reels, except that every pixel and sound is artificially generated from instructions typed by users. OpenAI CEO Sam Altman had proclaimed it “the most powerful imagination engine ever built.”

Johnson’s exploration began with absurd content (a man in a car full of hot dogs, a humanoid capybara playing football) before turning disturbing. He encountered security camera-style footage of animals blown into tornadoes, realistic-looking footage of Martin Luther King Jr. declaring, “I have a dream that they release the Epstein files,” and Tupac Shakur claiming to be living in Cuba.

“Sora is a ghoulish puppet show, and exploring it feels like wandering around an empty funfair,” Johnson wrote. Even apparent human interaction proves “uncanny and disquieting,” with users’ fake avatars collaborating with other fake avatars.

OpenAI says guardrails block offensive material

OpenAI says videos carry visible labels showing they were created with its tools, plus invisible fingerprints for tracing. The company claims to have “guardrails” blocking offensive material.

Johnson noted that enterprising trolls are already circumventing protections. During his first few days on the app, videos approximating Hitler were common, created through prompts like “show Charlie Chaplin in a German military uniform.” Tools already exist to remove Sora’s labels, and videos are proliferating on other platforms without attribution.

The journalist invoked theorist Stafford Beer’s principle that “the purpose of a system is what it does,” arguing Sora’s purpose appears to be “to make real the danger that activists have warned of: overwhelming users with so much fake content that they no longer have a choice but to assume that everything is false.”

When a user survey asked how using Sora impacted his mood, Johnson deleted the app from his phone.