Displaying what robots “hear” on screens makes conversations worse rather than better by causing people to focus on technical mistakes instead of natural interaction, according to new research that challenges common assumptions about AI transparency.

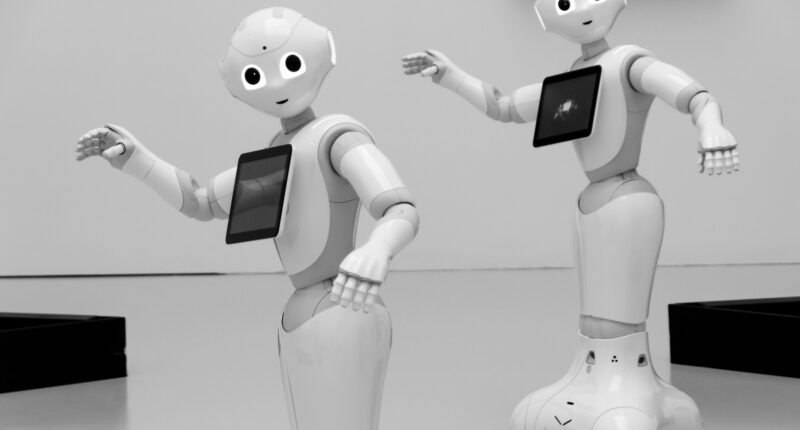

Researchers from the University of Copenhagen and Telecom Paris studied 108 people as they interacted with Pepper robots, which displayed transcripts of what the robots heard on their tablet screens. Participants spent 61% of their time after speaking focused on reading the text rather than watching the robot’s movements or expressions.

The study revealed that showing speech recognition transcripts created a new type of communication problem, in which people changed their minds about the robot’s responses after reading what the robot had actually “heard,” even when the conversation had seemed fine moments before.

Researchers observed that participants reclassified interactions they had initially perceived as successful as failures once they spotted speech recognition errors in the displayed text, revising their judgment of whether the robot had understood them.

The transcripts made people worry about what was happening inside the robot’s computer brain rather than about whether the conversation was flowing naturally, which is what people usually attend to when talking with one another.

In the experiments, participants used the error-prone transcripts as evidence that robot actions, such as handshakes or spoken responses, weren’t genuine. This led to disputes and complications that don’t arise in everyday human conversation, where each speaker’s thoughts remain private.

The findings suggest that making AI systems completely transparent may actually hurt interaction quality by removing helpful uncertainty that usually makes human communication smoother.

Eye-tracking showed that people gradually paid less attention to the robot’s physical responses and more attention to the text display over time, turning social interaction into an exercise in technical analysis.

The research challenges the widespread belief in AI development that transparency always improves human-AI interaction, suggesting that some internal processes might be better kept hidden to maintain natural conversation.

Researchers noted that showing what AI systems “think” creates entirely new situations with no equivalent in human conversation, which may require different approaches to designing conversational AI interfaces.