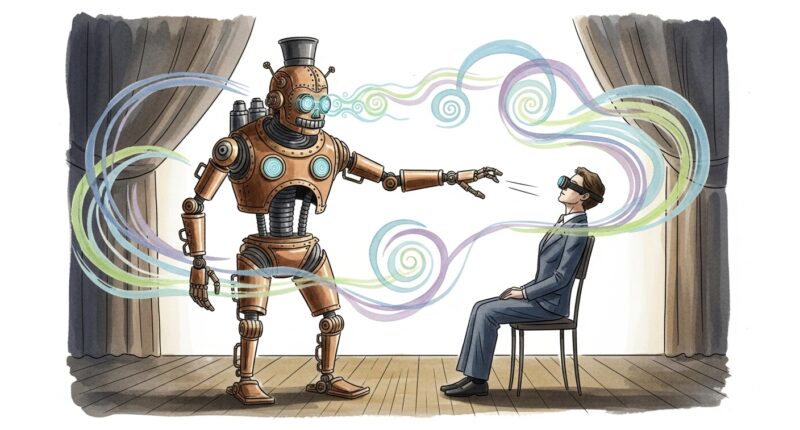

Artificial intelligence models are wasting vast amounts of energy to perform “mind reading” tasks that the human brain handles efficiently by age four, according to new research from the Stevens Institute of Technology.

The study, published in the journal npj Artificial Intelligence, found that while humans can process complex social cues in seconds using a small subset of neurons, AI models must activate nearly their entire neural network to achieve the same result.

Researchers examined how large language models (LLMs) encode “Theory of Mind” (ToM), the cognitive capacity to infer and reason about the mental states of others.

“When we, humans, evaluate a new task, we activate a very small part of our brain, but LLMs must activate pretty much all of its network to figure something new even if it’s fairly basic,” said Denghui Zhang, Assistant Professor in Information Systems and Analytics at the School of Business. “LLMs must do all the computations and then select the one thing you need. So you do a lot of redundant computations, because you compute a lot of things you don’t need. It’s very inefficient.”

Thoughts and beliefs

The researchers found that LLMs use a specialised set of internal connections to handle social reasoning. This ability depends strongly on how the model represents word positions, particularly through a method called “rotary positional encoding” (RoPE). These connections guide the model’s focus when reasoning about thoughts and beliefs.

“In simple terms, our results suggest that LLMs use built-in patterns for tracking positions and relationships between words to form internal ‘beliefs’ and make social inferences,” Zhang said.
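For readers unfamiliar with rotary positional encoding, the general idea can be illustrated with a minimal sketch. This is not the researchers' code: the function names, the toy vectors and the base value of 10000 (a common default) are assumptions chosen for illustration. Each pair of numbers in a word's vector is rotated by an angle that grows with the word's position, so the similarity between two words ends up depending on how far apart they are rather than on where they sit in the text.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by angles that scale with `position`."""
    d = len(vec)
    assert d % 2 == 0, "this sketch needs an even number of dimensions"
    out = []
    for i in range(d // 2):
        # Each pair gets its own rotation speed; later pairs rotate more slowly.
        theta = position * base ** (-2 * i / d)
        x, y = vec[2 * i], vec[2 * i + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out

def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))

# Hypothetical toy "query" and "key" vectors, not taken from the study.
q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.0, 0.7, 0.4]

# The same gap of three positions, at two different places in the text.
score_near = dot(rope(q, 5), rope(k, 2))
score_far = dot(rope(q, 105), rope(k, 102))
```

In this sketch the two scores agree up to floating-point rounding, because only the relative offset between the two positions affects the result. That relative-position signal is the kind of built-in pattern the quote above refers to.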

The team aims to use these findings to make AI models more scalable by emulating the human brain’s efficiency.

“We all know that AI is energy expensive, so if we want to make it scalable, we have to change how it operates,” said Zhaozhuo Xu, Assistant Professor of Computer Science at the School of Engineering. “Our human brain is very energy efficient, so we hope this research brings us back to thinking how we can make LLMs to work more like the human brain, so that they activate only a subset of parameters in charge of a specific task.”