Engineers have solved one of the biggest frustrations with wearable technology: gesture control that fails the moment you start moving. A new AI-powered system from the University of California San Diego can reliably read everyday gestures, even when the user is running, riding in a car, or on turbulent ocean waves.

The breakthrough, published in Nature Sensors, could soon allow patients in rehabilitation or individuals with limited mobility to use natural gestures to control robotic aids. It could also enable industrial workers and first responders to control robots hands-free in hazardous environments.

Wearable gesture sensors typically work well when a user is still, but the signals “start to fall apart” under excessive motion noise.

“Our system overcomes this limitation,” said study co-first author Xiangjun Chen, a postdoctoral researcher at UC San Diego. “By integrating AI to clean noisy sensor data in real time, the technology enables everyday gestures to reliably control machines even in highly dynamic environments.”

Motion and muscle sensors

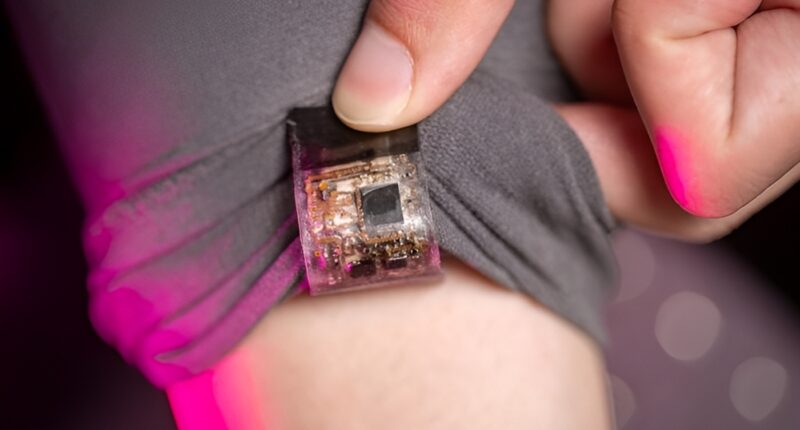

The device is a soft electronic patch on a cloth armband that integrates motion and muscle sensors, a Bluetooth microcontroller, and a stretchable battery. A customised deep-learning framework strips away interference from the sensor signals, interprets the gesture, and transmits a command to a machine, such as a robotic arm, in real time.

To the researchers’ knowledge, this is the first wearable human-machine interface that works reliably across such a wide range of motion disturbances.

The system was validated under multiple dynamic conditions. Subjects used the device to control a robotic arm while running, while exposed to high-frequency vibrations, and under simulated ocean conditions at the Scripps Ocean-Atmosphere Research Simulator. In all cases, the system delivered accurate, low-latency performance.

“This advancement brings us closer to intuitive and robust human-machine interfaces that can be deployed in daily life,” Chen said. “It paves the way for next-generation wearable systems that are not only stretchable and wireless, but also capable of learning from complex environments and individual users.”