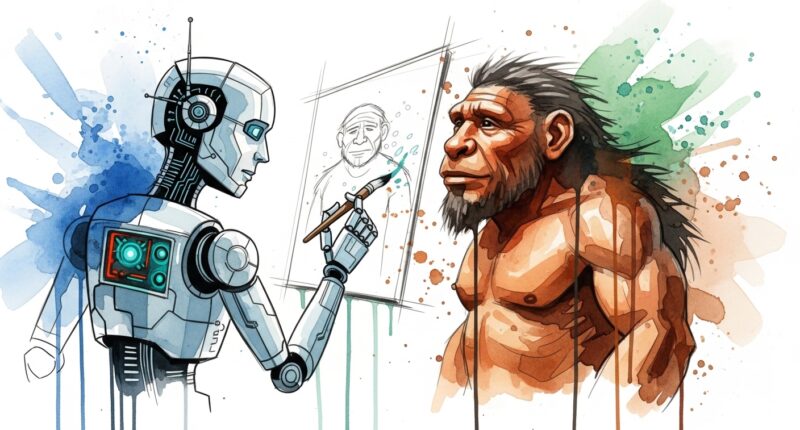

If you ask an artificial intelligence model to show you a Neanderthal, it will likely produce an image based on Victorian-era stereotypes rather than modern science, according to new research.

A study from the University of Maine and the University of Chicago found that, despite being at the cutting edge of technology, generative AI tools often rely on outdated scholarly research to form their answers.

In a paper published in the journal Advances in Archaeological Practice, researchers Matthew Magnani and Jon Clindaniel found that when asked to depict the daily life of Neanderthals, ChatGPT produced narratives consistent with 1960s anthropology, while DALL-E 3 generated images consistent with scientific understanding from the late 1980s.

“It’s broadly important to examine the types of biases baked into our everyday use of these technologies,” says Magnani, an assistant professor of anthropology. “Are we prone to receive dated answers when we seek information from chatbots, and in which fields?”

Stooped men and anachronisms

To test the systems, the researchers ran four different prompts 100 times each using DALL-E 3 for images and ChatGPT (GPT-3.5) for text.

The visual results depicted Neanderthals as they were imagined more than a century ago: primitive, stooped creatures covered in body hair, looking more like chimpanzees than humans.

The study also noted a significant gender bias; the AI-generated images almost entirely excluded women and children.

The text narratives fared little better, often underplaying the species’ cultural sophistication. About half of the narratives generated by ChatGPT failed to align with contemporary scholarly knowledge, a figure that rose to over 80 per cent for one specific prompt.

The AI also hallucinated technologies that were far too advanced for the era, including metal, glass and thatched roofs.

The researchers argue that this “accuracy gap” is largely due to copyright laws. Because copyright restrictions established in the 1920s limited access to scholarly research until the open access movement began in the early 2000s, AI models are often trained on older, out-of-copyright material or incomplete datasets.

“One important way we can render more accurate AI output is to work on ensuring anthropological datasets and scholarly articles are AI-accessible,” says Clindaniel.