Mobile robots depend on knowing their exact location to navigate autonomously. But when satellite navigation signals fail, such as indoors or near tall buildings, or when a robot is unexpectedly moved, it can become completely lost.

In robotics, this is known as the “kidnapped robot” problem: a scenario in which a machine loses knowledge of its pose (its position and orientation) after being displaced or powered off.

To solve this, researchers at the Miguel Hernández University of Elche (UMH) in Spain have developed a new artificial intelligence system that helps robots regain their bearings in large, ever-changing spaces.

The study, published in the International Journal of Intelligent Systems, introduces a framework called MCL-DLF (Monte Carlo Localization – Deep Local Feature).

Thinking like a human

The system mimics how humans orient themselves in unfamiliar places.

“This is similar to how people first recognise a general area and then rely on small distinguishing details to determine their precise location,” explained lead author Míriam Máximo, whose work was directed by UMH researchers Mónica Ballesta and David Valiente.

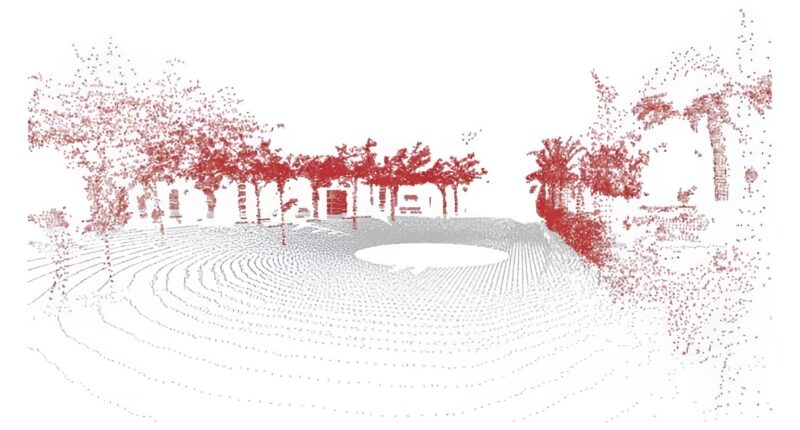

Using 3D LiDAR point clouds, the robot first performs a “coarse” localisation step by identifying large, global structural features like buildings or vegetation. Once it has narrowed down its general region, the system analyses finer, detailed local features to pinpoint its exact position and orientation.
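The two-stage idea can be illustrated with a toy retrieval sketch. This is not the UMH team's implementation: the place names, the two-number "descriptors" and the helper functions below are all hypothetical stand-ins for features that would in practice be extracted from LiDAR point clouds. The coarse step shortlists places whose global descriptor resembles the query, and the fine step picks the shortlisted place whose local descriptors match best.

```python
import math

def dist(a, b):
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical map: one global descriptor and a few local descriptors per place.
# "library" and "lab" are deliberately similar at the global level.
MAP = {
    "library":  {"global": [0.90, 0.10], "local": [[0.1, 0.2], [0.8, 0.9]]},
    "lab":      {"global": [0.85, 0.15], "local": [[0.5, 0.5], [0.3, 0.7]]},
    "car_park": {"global": [0.10, 0.90], "local": [[0.1, 0.2], [0.8, 0.9]]},
}

def coarse_candidates(query_global, k=2):
    """Coarse step: shortlist the k places with the closest global descriptor."""
    ranked = sorted(MAP, key=lambda name: dist(MAP[name]["global"], query_global))
    return ranked[:k]

def local_score(query_local, place_local):
    """Mean distance from each query local descriptor to its nearest match."""
    nearest = [min(dist(q, p) for p in place_local) for q in query_local]
    return sum(nearest) / len(nearest)

def coarse_to_fine(query_global, query_local, k=2):
    """Fine step: among the shortlisted places, pick the best local match."""
    candidates = coarse_candidates(query_global, k)
    return min(candidates, key=lambda name: local_score(query_local, MAP[name]["local"]))

query_global = [0.86, 0.14]
query_local = [[0.12, 0.21], [0.79, 0.90]]
print(coarse_candidates(query_global))
print(coarse_to_fine(query_global, query_local))
```

In this toy data the global descriptor alone would favour the lab, while the local details single out the library, which is the kind of ambiguity the second stage is meant to resolve.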

To avoid getting confused in visually similar environments, the system uses deep learning. Instead of relying on predefined rules, the AI automatically learns which environmental characteristics are most informative for localisation. These learned features are then combined with probabilistic Monte Carlo Localization, which maintains multiple hypotheses about the robot’s pose and updates them as new sensor data arrives.
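For readers curious about what “maintaining multiple hypotheses” looks like in practice, here is a minimal Monte Carlo Localization sketch under simplifying assumptions. It is a generic textbook-style particle filter, not the MCL-DLF system: the 10 m by 10 m world, the three landmarks and the Gaussian range likelihood are hypothetical placeholders for the learned LiDAR features described in the study. The particles start spread across the whole map, as they would for a kidnapped robot, and concentrate around the true position as measurements arrive.

```python
import math
import random

# Hypothetical landmark positions standing in for recognisable map features.
LANDMARKS = [(2.0, 3.0), (8.0, 1.0), (5.0, 9.0)]

def expected_ranges(pose):
    """Distances from a pose (x, y) to every landmark."""
    x, y = pose
    return [math.hypot(x - lx, y - ly) for lx, ly in LANDMARKS]

def likelihood(particle, observed, sigma=0.5):
    """Gaussian score for how well a particle explains the observed ranges."""
    sq_err = sum((e - o) ** 2 for e, o in zip(expected_ranges(particle), observed))
    return math.exp(-sq_err / (2 * sigma ** 2))

def mcl_step(particles, motion, observed, rng, motion_noise=0.1):
    """One predict, weight and resample cycle."""
    dx, dy = motion
    # Predict: move every hypothesis by the commanded motion plus noise.
    moved = [(x + dx + rng.gauss(0, motion_noise),
              y + dy + rng.gauss(0, motion_noise)) for x, y in particles]
    # Weight: score each hypothesis against the new sensor data.
    weights = [likelihood(p, observed) for p in moved]
    if sum(weights) == 0.0:  # every weight underflowed: fall back to uniform
        weights = [1.0] * len(moved)
    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))

def estimate(particles):
    """Mean of the particle set, used as the position estimate."""
    n = len(particles)
    return (sum(x for x, _ in particles) / n, sum(y for _, y in particles) / n)

def localise(true_start=(1.0, 1.0), steps=15, n_particles=2000, seed=0):
    rng = random.Random(seed)
    # Kidnapped robot: no prior, so hypotheses cover the whole map uniformly.
    particles = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(n_particles)]
    true_pose, motion = true_start, (0.4, 0.3)
    for _ in range(steps):
        true_pose = (true_pose[0] + motion[0], true_pose[1] + motion[1])
        observed = [r + rng.gauss(0, 0.1) for r in expected_ranges(true_pose)]
        particles = mcl_step(particles, motion, observed, rng)
    return true_pose, estimate(particles)

true_pose, est = localise()
print("true:", true_pose, "estimate:", est)
```

The sketch tracks position only. A full system would also carry orientation in each particle and would replace the range likelihood with a score based on how well the observed features match the map.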

Adapting to the seasons

One of the biggest challenges for outdoor robots is that environments change over time. Vegetation grows, seasons shift, and lighting fluctuates, all of which can significantly alter an area’s appearance and confuse traditional navigation systems.

However, the UMH researchers validated their system over several months on their Elche campus — in both indoor and outdoor scenarios — and found it remained highly robust against seasonal and structural changes.

The team reported that the MCL-DLF system achieved higher position accuracy than conventional approaches while maintaining comparable or superior orientation estimates and lower temporal variability.

The breakthrough brings machines a step closer to fully autonomous navigation without the need for external positioning infrastructure, paving the way for more reliable service robots, autonomous vehicles, and automated logistics.