Researchers from MIT and the MIT-IBM Watson AI Lab have developed a training method that teaches vision-language models to locate personalised objects, addressing a fundamental weakness in current AI systems.

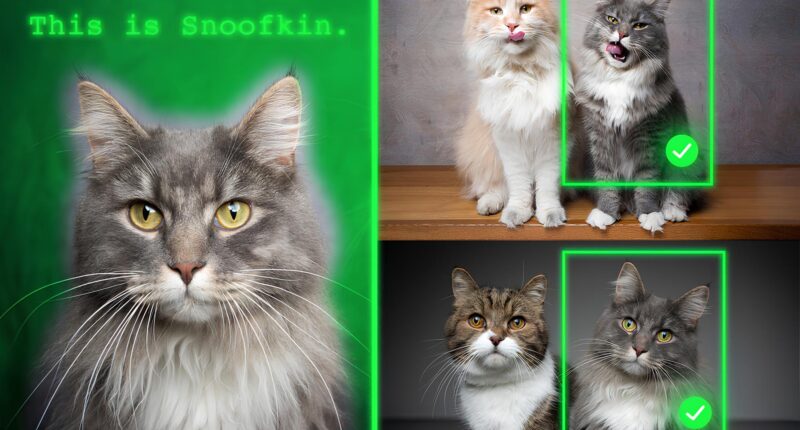

Vision-language models like GPT-5 excel at recognising general objects such as dogs, but perform poorly when asked to identify a specific pet among other animals. The new technique uses video-tracking data showing the same object across multiple frames, forcing models to rely on contextual clues rather than memorised knowledge.

The researchers structured their dataset so models must focus on visual context to identify personalised objects. They discovered that models attempt to bypass this challenge by using pretrained knowledge, prompting the team to employ pseudo-names in the training data. Instead of labelling a tiger as “tiger”, they used names like “Charlie” to prevent the model from relying on previously learned associations.

“It took us a while to figure out how to prevent the model from cheating. But we changed the game for the model. The model does not know that ‘Charlie’ can be a tiger, so it is forced to look at the context,” says Jehanzeb Mirza, an MIT postdoc and senior author of a paper on this technique.

Models retrained with the new dataset improved accuracy at personalised localisation by approximately 12 per cent on average. When combined with pseudo-name labelling, performance gains reached 21 per cent. Larger models showed greater improvements.

The technique maintains the model’s general capabilities whilst adding personalised object recognition. Potential applications include tracking specific items over time, ecological monitoring of animal species, and assistive technologies for visually impaired users.

The research revealed an unexpected finding: vision-language models do not inherit in-context learning capabilities from their base large language models, despite theoretical expectations. The team plans to investigate why this capability fails to transfer between the two model types.

The work will be presented at the International Conference on Computer Vision.