Researchers from the University of Sheffield and the Alan Turing Institute have developed a framework for building AI that learns from multiple types of data beyond vision and language. Their study finds that 88.9 per cent of papers posted to the preprint repository arXiv in 2024 on AI that draws on exactly two types of data involved vision or language, despite the technology’s potential to tackle complex real-world challenges.

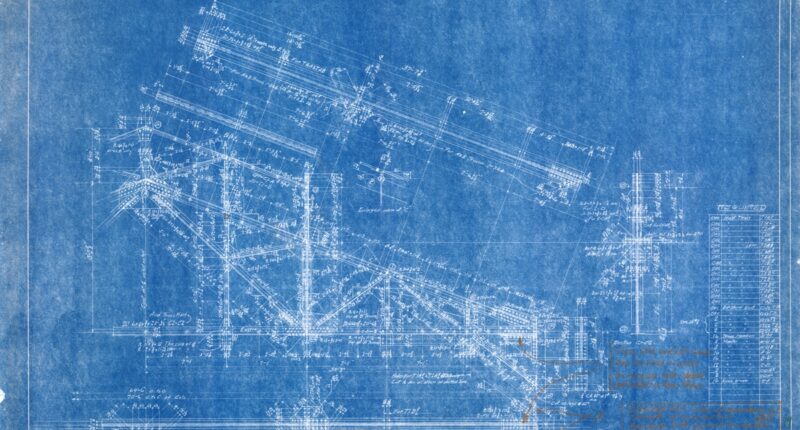

The blueprint, published in the journal Nature Machine Intelligence, provides a roadmap for creating multimodal AI systems that learn from different types of data such as text, images, sound and sensor readings. The framework could lead to AI that is better at diagnosing diseases, predicting extreme weather and improving the safety of self-driving cars.

The study found that, despite the advantages of multimodal AI, most systems and research still focus mainly on vision and language data. An analysis of papers posted in 2024 on arXiv, a leading open repository for computer science preprints, showed that 88.9 per cent of those featuring AI that draws on exactly two different types of data involved vision or language data.

“AI has made great progress in vision and language, but the real world is far richer and more complex,” said Professor Haiping Lu, who led the study from the University of Sheffield’s School of Computer Science and Centre for Machine Intelligence. “To address global challenges like pandemics, sustainable energy, and climate change, we need multimodal AI that integrates broader types of data and expertise.”

Lu added that “the study provides a deployment blueprint for AI that works beyond the lab — focusing on safety, reliability, and real-world usefulness”.

Safer conditions for cars

Combining visual, sensor and environmental data could help self-driving cars perform more safely in complex conditions, while integrating medical, clinical and genomic data could make AI tools more accurate at diagnosing diseases and supporting drug discovery.

The research illustrates the new approach through three real-world use cases: pandemic response, self-driving car design and climate change adaptation. The work brings together 48 contributors from 22 institutions across the UK and worldwide.

“By integrating and modelling large, diverse sets of data through multimodal AI, our work together with Turing collaborators is setting a new standard for environmental forecasting,” said Dr Louisa van Zeeland, research lead at the Alan Turing Institute. “This sophisticated approach enables us to generate predictions over various spatial and temporal scales, driving real-world results in areas from Arctic conservation to agricultural resilience.”

The work originated in a collaboration supported by the Alan Turing Institute through its Meta-learning for Multimodal Data Interest Group, led by Professor Lu. That collaboration also helped inspire the vision behind the UK Open Multimodal AI Network, a £1.8 million EPSRC-funded network now led by Professor Lu to advance deployment-centric multimodal AI across the UK.