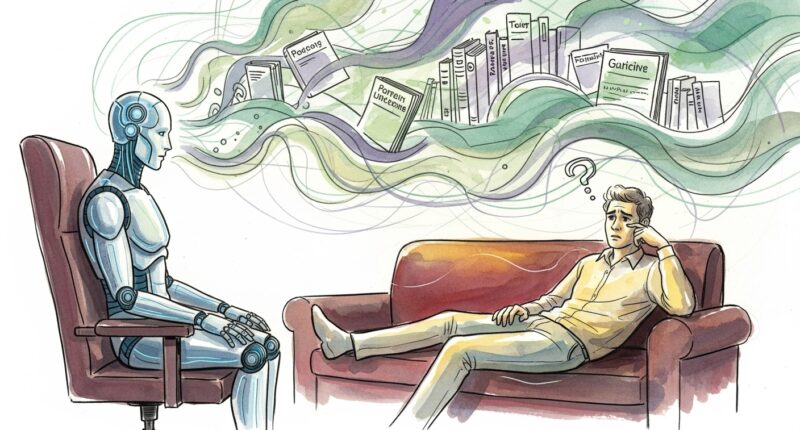

A critical flaw in large language models (LLMs) is threatening to “fundamentally compromise” scientific research, according to a new study that found a leading AI model fabricated nearly one in five of the citations it produced.

The research, published in JMIR Mental Health, tested GPT-4o and found that 19.9 per cent of all the citations it generated in simulated mental health literature reviews were “bibliographic hallucinations” that could not be traced to any real publication.

Among the citations that did correspond to real publications, 45.4 per cent contained bibliographic errors, most commonly incorrect or invalid Digital Object Identifiers (DOIs).

In total, more than half of all the citations generated by the AI (about 56 per cent) were either entirely fabricated or contained errors, prompting the researchers to issue an urgent call for rigorous human verification.
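The 45.4 per cent error rate applies only to the citations that were not fabricated, which made up 100 − 19.9 = 80.1 per cent of the total. Assuming both percentages are shares of the same pool of generated citations, the overall share that was fabricated or erroneous works out as follows:

$$
0.199 + 0.454 \times (1 - 0.199) = 0.199 + 0.454 \times 0.801 \approx 0.199 + 0.364 = 0.563
$$

By this calculation, roughly 56 per cent of all citations were fabricated or contained errors, and only about 44 per cent were both real and accurate.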

The authors, including Dr Jake Linardon from Deakin University, warned that these errors “fundamentally compromise the integrity and trustworthiness of scientific results” by breaking the chain of verifiability and misleading readers.

Major depressive disorder

The study systematically tested the reliability of the AI’s output across three mental health topics with differing levels of public awareness: major depressive disorder (high familiarity), binge eating disorder (moderate) and body dysmorphic disorder (low).

The researchers found that the risk of fabrication was significantly higher for less familiar topics. The fabrication rate was 28 per cent for binge eating disorder and 29 per cent for body dysmorphic disorder, compared with only 6 per cent for major depressive disorder.

For some disorders, fabrication rates were also higher for specialised review prompts, such as those focusing on digital interventions, than for general overviews.

The study’s authors warned that researchers and students must subject all LLM-generated references to “careful human verification to validate their accuracy and authenticity”. They also called on journal editors to implement stronger safeguards, such as detection software, and on academic institutions to develop clear policies and training to address the risk.