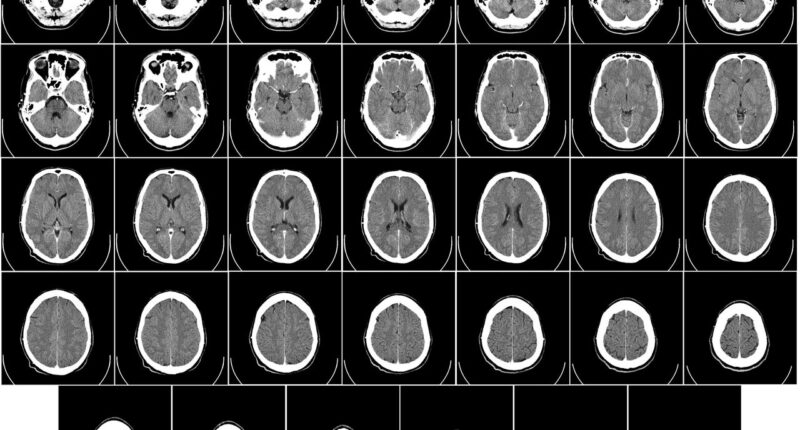

MIT researchers have developed an artificial intelligence system that streamlines medical image analysis by enabling rapid annotation of regions of interest, potentially transforming how scientists conduct clinical studies and treatment research.

The system, called MultiverSeg, targets medical image segmentation, the time-consuming step in which researchers manually outline structures such as brain regions in scans before studying disease progression or treatment efficacy, MIT News reports.

The technology allows users to segment biomedical imaging datasets through simple interactions such as clicking, drawing boxes, and scribbling on images. As researchers process additional images, the required user input decreases progressively until the model can perform accurate segmentations independently.

MultiverSeg’s architecture distinguishes it from existing medical segmentation tools by maintaining a context set of previously segmented images, which informs future predictions. This approach eliminates the need to retrain models for new tasks whilst avoiding repetitive work across image datasets.
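The context-set idea can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: `ContextSet`, `predict`, and `segment_dataset` are hypothetical names, images are reduced to short lists of pixel intensities, and a nearest-intensity lookup stands in for the neural network. It is not MultiverSeg's code or API. It shows only the workflow described above, in which each finished segmentation joins the context and later images need fewer corrections, with no retraining step.

```python
from dataclasses import dataclass, field

# Hypothetical toy types: an "image" is a flat list of pixel intensities,
# a "mask" is a flat list of 0/1 labels of the same length.
Image = list[float]
Mask = list[int]


@dataclass
class ContextSet:
    """Stores previously segmented (image, mask) pairs for one task."""
    examples: list[tuple[Image, Mask]] = field(default_factory=list)

    def add(self, image: Image, mask: Mask) -> None:
        self.examples.append((image, mask))


def predict(image: Image, context: ContextSet, clicks: dict[int, int]) -> Mask:
    """Toy stand-in for the model (NOT MultiverSeg's network).

    Each pixel takes the label of the context pixel with the closest
    intensity; user clicks (pixel index -> label) override the result.
    """
    labelled = [(p, m) for img, msk in context.examples for p, m in zip(img, msk)]
    mask = []
    for pixel in image:
        if labelled:
            _, label = min(labelled, key=lambda pm: abs(pm[0] - pixel))
        else:
            label = 0  # empty context: fall back to background
        mask.append(label)
    for index, label in clicks.items():
        mask[index] = label
    return mask


def segment_dataset(images: list[Image], reference_masks: list[Mask]) -> list[int]:
    """Simulate a user and count the corrections needed for each image."""
    context = ContextSet()
    corrections_per_image = []
    for image, reference in zip(images, reference_masks):
        guess = predict(image, context, clicks={})
        # The simulated user clicks every pixel the first guess got wrong.
        clicks = {i: r for i, (g, r) in enumerate(zip(guess, reference)) if g != r}
        final = predict(image, context, clicks)
        context.add(image, final)  # no retraining: the result joins the context
        corrections_per_image.append(len(clicks))
    return corrections_per_image


if __name__ == "__main__":
    images = [[0.1, 0.9, 0.8, 0.2], [0.15, 0.85, 0.7, 0.1], [0.2, 0.95, 0.75, 0.05]]
    masks = [[0, 1, 1, 0]] * 3
    print(segment_dataset(images, masks))
```

In this toy run the first image needs two corrections and the next two need none, because the stored example already covers their intensity ranges. The real system's behaviour depends on its trained network and on the imaging task.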

The system requires no presegmented training data or extensive machine learning expertise, making it accessible to clinical researchers without computational backgrounds, who can apply it to a new segmentation task immediately.

“Many scientists might only have time to segment a few images per day for their research because manual image segmentation is so time-consuming. Our hope is that this system will enable new science by allowing clinical researchers to conduct studies they were prohibited from doing before because of the lack of an efficient tool,” said Hallee Wong, the study’s lead author and electrical engineering and computer science graduate student.

In performance testing, MultiverSeg outperformed existing segmentation tools: by the ninth image, it needed only two user clicks to generate a segmentation more accurate than that of a task-specific model. For certain image types, such as X-rays, a user may need to segment only one or two images manually before the model becomes accurate enough to make predictions on its own.

The research team, which also includes Jose Javier Gonzalez Ortiz, Professor John Guttag, and senior author Adrian Dalca of Harvard Medical School and MIT’s Computer Science and Artificial Intelligence Laboratory, will present its findings at the International Conference on Computer Vision.