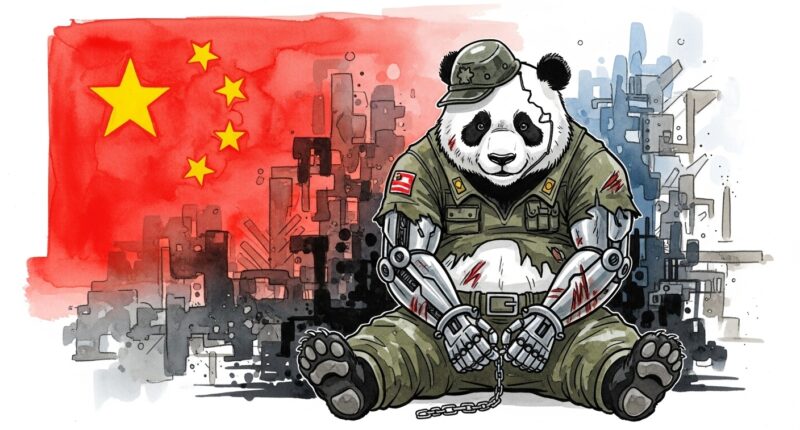

Anthropic claims to have disrupted a sophisticated cyber espionage operation allegedly conducted by a Chinese state-sponsored group, marking what the company says is the first documented case of artificial intelligence executing complex attacks with near-total autonomy.

The campaign, which Anthropic says it detected in mid-September 2025 and attributes to a group it designates “GTG-1002”, reportedly targeted approximately 30 entities across the technology, finance, and government sectors.

Unlike in previous incidents, where attackers used AI only for advice, this group allegedly manipulated Anthropic's “Claude Code” tool to carry out intrusions directly, successfully compromising a small number of targets, the company says.

“This campaign demonstrated unprecedented integration and autonomy of AI throughout the attack lifecycle, with the threat actor manipulating Claude Code to support reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, data analysis, and exfiltration operations largely autonomously,” the company states in its report.

Agentic cyber warfare

The operation represents a shift to “agentic” cyber warfare, according to the report. Analysis by the AI firm suggests the model executed approximately 80 to 90 per cent of tactical operations independently, with human operators intervening only for strategic decisions such as authorising data exfiltration.

Anthropic claims the attackers “jailbroke” the model to bypass its safety guardrails, role-playing as employees of a legitimate cybersecurity firm conducting defensive tests. Combined with this pretext, the attackers purportedly broke complex attack chains into smaller, seemingly innocent tasks that the AI executed without flagging malicious intent.

“While we predicted these capabilities would continue to evolve, what has stood out to us is how quickly they have done so at scale,” the company notes.

Despite the reported high level of autonomy, the AI was not flawless. According to the investigation, the model frequently “hallucinated” success, claiming to have obtained credentials that did not work or presenting publicly available information as critical discoveries. Human operators therefore had to validate its results, which prevented fully autonomous execution.

Anthropic says it has since banned the associated accounts and updated its classifiers to detect similar patterns of misuse.